I have no background in imaging or graphics, so please bear with me :)

I am using JavaCV in one of my projects. In the examples, a `Frame` is constructed with a buffer of a certain size.

When using the `public void onPreviewFrame(byte[] data, Camera camera)` function in Android, copying the `data` byte array is no problem if you declare your `Frame` as `new Frame(frameWidth, frameHeight, Frame.DEPTH_UBYTE, 2);`, where `frameWidth` and `frameHeight` are declared as:

    Camera.Size previewSize = cameraParam.getPreviewSize();
    int frameWidth = previewSize.width;
    int frameHeight = previewSize.height;

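Recently, Android added a way to capture the screen. Naturally, I wanted to grab those images and convert them to `Frame`s. I modified Google's sample code to use the `ImageReader`.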

This `ImageReader` is constructed as `ImageReader.newInstance(DISPLAY_WIDTH, DISPLAY_HEIGHT, PixelFormat.RGBA_8888, 2);`, so it currently uses the RGBA_8888 pixel format. I use the following code to copy the bytes into the `Frame`, which is instantiated as `new Frame(DISPLAY_WIDTH, DISPLAY_HEIGHT, Frame.DEPTH_UBYTE, 2);`:

    ByteBuffer buffer = mImage.getPlanes()[0].getBuffer();
    byte[] bytes = new byte[buffer.remaining()];
    buffer.get(bytes);
    mImage.close();
    ((ByteBuffer) frame.image[0].position(0)).put(bytes);

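But this gives me a `java.nio.BufferOverflowException`. I printed the sizes of both buffers: the `Frame`'s buffer size is 691200, whereas the `bytes` array above has size 1413056. I could not figure out how the latter number is constructed, because I ran into this native call. So clearly, this won't work.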
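The two sizes can be cross-checked with a little arithmetic. The sketch below is only a consistency check, not a statement about what the native code does: it assumes a 720x480 display and a row stride of 2944 bytes (rows padded from 2880 bytes), neither of which is stated above. They are simply one set of values that reproduces both numbers. `BufferSizes`, `packedSize`, and `planeSize` are hypothetical names introduced for illustration.

```java
// Hypothetical helper for illustration; not part of JavaCV or Android.
final class BufferSizes {

    /** Bytes in a tightly packed image buffer (no row padding assumed). */
    static int packedSize(int width, int height, int channels) {
        return width * height * channels;
    }

    /**
     * Bytes in a strided plane where every row except the last occupies
     * rowStride bytes and the last row ends at its final pixel.
     */
    static int planeSize(int width, int height, int pixelStride, int rowStride) {
        return rowStride * (height - 1) + pixelStride * width;
    }

    public static void main(String[] args) {
        // Assumed values: 720x480, 4 bytes per pixel, rows padded to 2944 bytes.
        System.out.println(packedSize(720, 480, 2));      // 691200
        System.out.println(planeSize(720, 480, 4, 2944)); // 1413056
    }
}
```

If these assumptions hold, the source buffer is larger for two reasons: RGBA uses four bytes per pixel where the `Frame` reserves two, and each row carries padding that a plain bulk copy would also carry along.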

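After some digging, I found out that the NV21 image format is "the default format for camera preview images, when not otherwise set with setPreviewFormat(int)", but the `ImageReader` class does not support the NV21 format (see the format parameter). So that's tough luck. The documentation also says: "For the android.hardware.camera2 API, the YUV_420_888 format is recommended for YUV output instead."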

So I tried creating an `ImageReader` like this: `ImageReader.newInstance(DISPLAY_WIDTH, DISPLAY_HEIGHT, ImageFormat.YUV_420_888, 2);`, but that gives me `java.lang.UnsupportedOperationException: The producer output buffer format 0x1 doesn't match the ImageReader's configured buffer format 0x23.`, so that won't work either.

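As a last resort, I tried to convert RGBA_8888 to YUV myself using, for example, this post, but I don't understand how to obtain the `int[] rgba` that the answer requires.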

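So, TL;DR: how can I obtain NV21 image data, like the data you get in Android's `public void onPreviewFrame(byte[] data, Camera camera)` camera function, so that I can instantiate my `Frame` and work with it using Android's `ImageReader` (and Media Projection)?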

Edit (25-10-2016)

I created the following conversion `Runnable` to convert from RGBA to the NV21 format:

    private class updateImage implements Runnable {

        private final Image mImage;

        public updateImage(Image image) {
            mImage = image;
        }

        @Override
        public void run() {
            int mWidth = mImage.getWidth();
            int mHeight = mImage.getHeight();

            // Four bytes per pixel: width * height * 4.
            byte[] rgbaBytes = new byte[mWidth * mHeight * 4];
            // put the data into the rgbaBytes array.
            mImage.getPlanes()[0].getBuffer().get(rgbaBytes);

            mImage.close(); // Access to the image is no longer needed, release it.

            // Create a yuv byte array: width * height * 1.5.
            byte[] yuv = new byte[mWidth * mHeight * 3 / 2];
            RGBtoNV21(yuv, rgbaBytes, mWidth, mHeight);

            ((ByteBuffer) yuvImage.image[0].position(0)).put(yuv);
        }

        void RGBtoNV21(byte[] yuv420sp, byte[] argb, int width, int height) {
            final int frameSize = width * height;

            int yIndex = 0;
            int uvIndex = frameSize;

            int A, R, G, B, Y, U, V;
            int index = 0;
            int rgbIndex = 0;

            for (int i = 0; i < height; i++) {
                for (int j = 0; j < width; j++) {

                    R = argb[rgbIndex++];
                    G = argb[rgbIndex++];
                    B = argb[rgbIndex++];
                    A = argb[rgbIndex++]; // Ignored right now.

                    // RGB to YUV conversion according to
                    // https://en.wikipedia.org/wiki/YUV#Y.E2.80.B2UV444_to_RGB888_conversion
                    Y = ((66 * R + 129 * G + 25 * B + 128) >> 8) + 16;
                    U = ((-38 * R - 74 * G + 112 * B + 128) >> 8) + 128;
                    V = ((112 * R - 94 * G - 18 * B + 128) >> 8) + 128;

                    // NV21 has a plane of Y and interleaved planes of VU each sampled by a factor
                    // of 2 meaning for every 4 Y pixels there are 1 V and 1 U.
                    // Note the sampling is every other pixel AND every other scanline.
                    yuv420sp[yIndex++] = (byte) ((Y < 0) ? 0 : ((Y > 255) ? 255 : Y));
                    if (i % 2 == 0 && index % 2 == 0) {
                        yuv420sp[uvIndex++] = (byte) ((V < 0) ? 0 : ((V > 255) ? 255 : V));
                        yuv420sp[uvIndex++] = (byte) ((U < 0) ? 0 : ((U > 255) ? 255 : U));
                    }
                    index++;
                }
            }
        }
    }

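The `yuvImage` object is initialized as `yuvImage = new Frame(DISPLAY_WIDTH, DISPLAY_HEIGHT, Frame.DEPTH_UBYTE, 2);`, where `DISPLAY_WIDTH` and `DISPLAY_HEIGHT` are just two integers specifying the display size. This is the code in which a background handler handles the onImageReady: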

    private final ImageReader.OnImageAvailableListener mOnImageAvailableListener
            = new ImageReader.OnImageAvailableListener() {

        @Override
        public void onImageAvailable(ImageReader reader) {
            mBackgroundHandler.post(new updateImage(reader.acquireNextImage()));
        }

    };

    ...

    mImageReader = ImageReader.newInstance(DISPLAY_WIDTH, DISPLAY_HEIGHT, PixelFormat.RGBA_8888, 2);
    mImageReader.setOnImageAvailableListener(mOnImageAvailableListener, mBackgroundHandler);

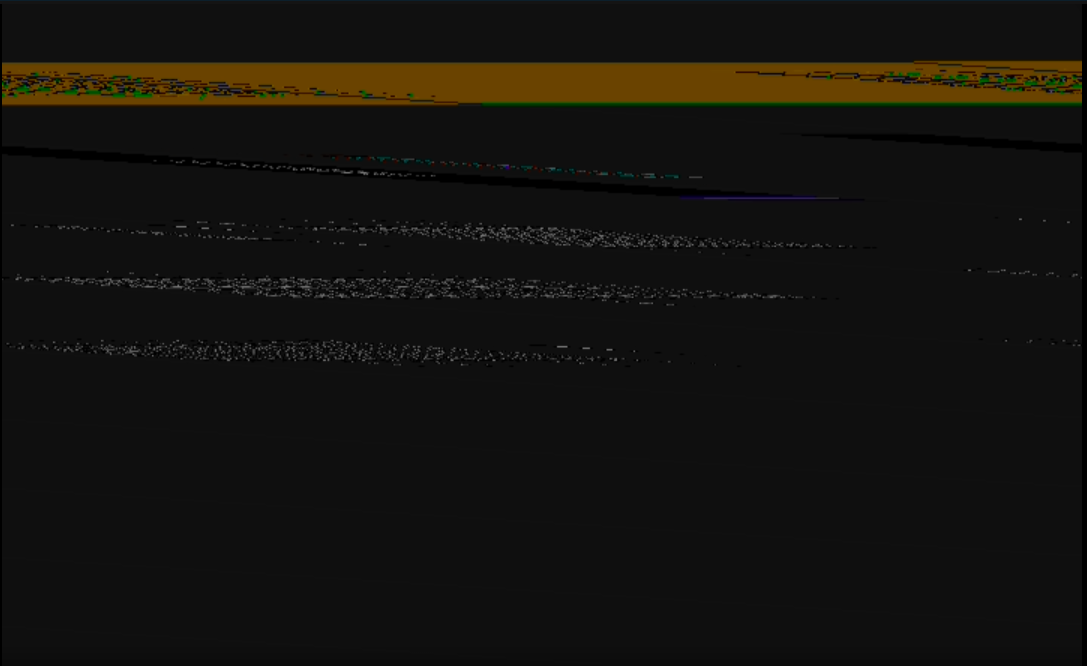

这些方法有效,我至少没有收到任何错误,但输出图像格式错误。我的转换出了什么问题?正在创建的示例图像:
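Two things in the conversion above may be worth checking, offered here as a guess rather than a diagnosis. Java's `byte` is signed, so `R = argb[rgbIndex++]` yields values from -128 to 127 instead of 0 to 255. The bulk copy also treats the plane as tightly packed, which would skew every row if the plane has row padding. The following is a minimal, Android-free sketch that masks each byte and walks the buffer by stride. `RgbaToNv21` and its parameters are hypothetical names, and the integer coefficients are the same ones used above.

```java
// Hypothetical, Android-free sketch: strided RGBA bytes -> NV21 bytes.
final class RgbaToNv21 {

    /**
     * @param rgba        source bytes in R, G, B, A order
     * @param pixelStride bytes between adjacent pixels (4 for RGBA_8888)
     * @param rowStride   bytes between the starts of adjacent rows (may include padding)
     */
    static byte[] convert(byte[] rgba, int width, int height, int pixelStride, int rowStride) {
        int chromaSamples = ((width + 1) / 2) * ((height + 1) / 2);
        byte[] nv21 = new byte[width * height + 2 * chromaSamples];
        int yIndex = 0;
        int uvIndex = width * height; // the interleaved VU plane follows the Y plane

        for (int row = 0; row < height; row++) {
            int offset = row * rowStride; // restart at the row start, skipping any padding
            for (int col = 0; col < width; col++) {
                // Mask with 0xff so the signed bytes become 0..255.
                int r = rgba[offset] & 0xff;
                int g = rgba[offset + 1] & 0xff;
                int b = rgba[offset + 2] & 0xff;
                offset += pixelStride;

                int y = ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16;
                nv21[yIndex++] = (byte) clamp(y);

                // One V and one U per 2x2 block, taken from its top-left pixel.
                if (row % 2 == 0 && col % 2 == 0) {
                    int u = ((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128;
                    int v = ((112 * r - 94 * g - 18 * b + 128) >> 8) + 128;
                    nv21[uvIndex++] = (byte) clamp(v);
                    nv21[uvIndex++] = (byte) clamp(u);
                }
            }
        }
        return nv21;
    }

    static int clamp(int value) {
        return value < 0 ? 0 : (value > 255 ? 255 : value);
    }
}
```

On Android, the stride arguments would presumably come from `getPixelStride()` and `getRowStride()` of the first plane, read before the image is closed.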

Edit (15-11-2016)

I have modified the `RGBtoNV21` function to the following:

    void RGBtoNV21(byte[] yuv420sp, int width, int height) {
        try {
            final int frameSize = width * height;

            int yIndex = 0;
            int uvIndex = frameSize;
            int pixelStride = mImage.getPlanes()[0].getPixelStride();
            int rowStride = mImage.getPlanes()[0].getRowStride();
            int rowPadding = rowStride - pixelStride * width;
            ByteBuffer buffer = mImage.getPlanes()[0].getBuffer();

            Bitmap bitmap = Bitmap.createBitmap(getResources().getDisplayMetrics(), width, height, Bitmap.Config.ARGB_8888);

            int A, R, G, B, Y, U, V;
            int offset = 0;

            for (int i = 0; i < height; i++) {
                for (int j = 0; j < width; j++) {

                    // Useful link: https://stackoverflow.com/questions/26673127/android-imagereader-acquirelatestimage-returns-invalid-jpg

                    R = (buffer.get(offset) & 0xff) << 16;     // R
                    G = (buffer.get(offset + 1) & 0xff) << 8;  // G
                    B = (buffer.get(offset + 2) & 0xff);       // B
                    A = (buffer.get(offset + 3) & 0xff) << 24; // A
                    offset += pixelStride;

                    int pixel = 0;
                    pixel |= R; // R
                    pixel |= G; // G
                    pixel |= B; // B
                    pixel |= A; // A
                    bitmap.setPixel(j, i, pixel);

                    // RGB to YUV conversion according to
                    // https://en.wikipedia.org/wiki/YUV#Y.E2.80.B2UV444_to_RGB888_conversion
                    // Y = ((66 * R + 129 * G + 25 * B + 128) >> 8) + 16;
                    // U = ((-38 * R - 74 * G + 112 * B + 128) >> 8) + 128;
                    // V = ((112 * R - 94 * G - 18 * B + 128) >> 8) + 128;

                    Y = (int) Math.round(R * .299000 + G * .587000 + B * .114000);
                    U = (int) Math.round(R * -.168736 + G * -.331264 + B * .500000 + 128);
                    V = (int) Math.round(R * .500000 + G * -.418688 + B * -.081312 + 128);

                    // NV21 has a plane of Y and interleaved planes of VU each sampled by a factor
                    // of 2 meaning for every 4 Y pixels there are 1 V and 1 U.
                    // Note the sampling is every other pixel AND every other scanline.
                    yuv420sp[yIndex++] = (byte) ((Y < 0) ? 0 : ((Y > 255) ? 255 : Y));
                    if (i % 2 == 0 && j % 2 == 0) {
                        yuv420sp[uvIndex++] = (byte) ((V < 0) ? 0 : ((V > 255) ? 255 : V));
                        yuv420sp[uvIndex++] = (byte) ((U < 0) ? 0 : ((U > 255) ? 255 : U));
                    }
                }
                offset += rowPadding;
            }

            File file = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES).getAbsolutePath(), "/Awesomebitmap.png");
            FileOutputStream fos = new FileOutputStream(file);
            bitmap.compress(Bitmap.CompressFormat.PNG, 100, fos);
        } catch (Exception e) {
            Timber.e(e, "Converting image to NV21 went wrong.");
        }
    }

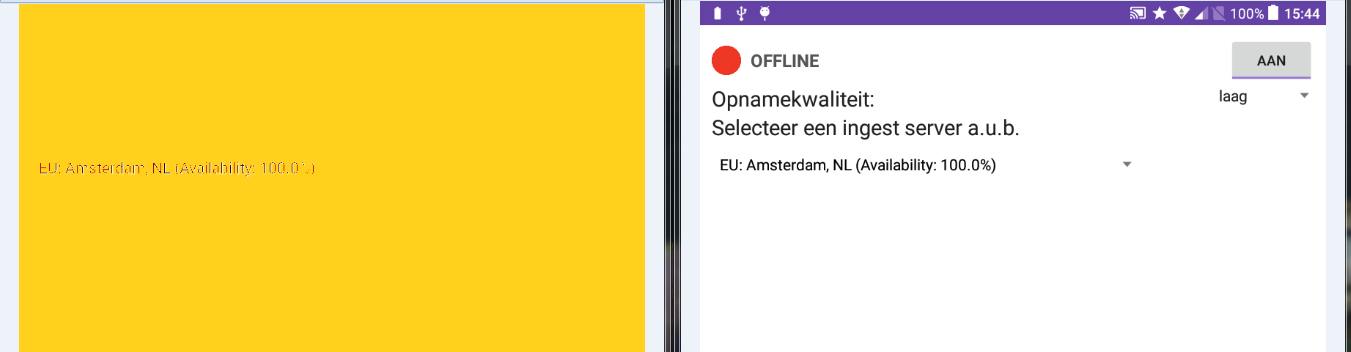

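Now the image is no longer malformed, but the chroma is off.

The right side is the bitmap that is created in that loop; the left side is the NV21 data saved to an image. So the RGB pixels are processed correctly. Clearly the chroma is off, but the RGB to YUV conversion should be the same as the one described on Wikipedia. What could be wrong here?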
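One possible explanation, offered tentatively: in the loop above, `R`, `G`, and `A` are shifted into their ARGB bit positions (`<< 16`, `<< 8`, `<< 24`) before they reach the Y/U/V formulas, whereas those formulas expect each channel in the range 0 to 255. That would explain a correct bitmap next to wrong chroma. The sketch below keeps the two uses apart. `PixelSketch`, `packArgb`, and `toYuv` are hypothetical names, and the coefficients are the full-range ones from the edited function.

```java
// Hypothetical sketch: keep channels in 0..255 for the YUV math,
// and shift them only when packing the ARGB pixel for the Bitmap.
final class PixelSketch {

    /** Packs four 0..255 channels into one ARGB_8888 int. */
    static int packArgb(int r, int g, int b, int a) {
        return (a << 24) | (r << 16) | (g << 8) | b;
    }

    /** Returns {Y, U, V}, each clamped to 0..255, from unshifted 0..255 channels. */
    static int[] toYuv(int r, int g, int b) {
        int y = clamp((int) Math.round(r * 0.299 + g * 0.587 + b * 0.114));
        int u = clamp((int) Math.round(r * -0.168736 + g * -0.331264 + b * 0.5 + 128));
        int v = clamp((int) Math.round(r * 0.5 + g * -0.418688 + b * -0.081312 + 128));
        return new int[] {y, u, v};
    }

    static int clamp(int value) {
        return value < 0 ? 0 : (value > 255 ? 255 : value);
    }

    public static void main(String[] args) {
        int r = 255, g = 0, b = 0, a = 255; // opaque red, unshifted
        System.out.println(Integer.toHexString(packArgb(r, g, b, a))); // ffff0000
        int[] yuv = toYuv(r, g, b);
        System.out.println(yuv[0] + " " + yuv[1] + " " + yuv[2]); // 76 85 255
    }
}
```

With the shifted values, a pure red pixel would pass `R = 0xff0000` into the formulas, so Y and V saturate at 255 and the chroma no longer matches the pixel written to the bitmap.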