I know I can send hyperparameters to Trains as a dictionary.

But can it also automatically log hyperparameters that were recorded with the TF2 HParams module?

Edit: In the HParams tutorial this is done with `hp.hparams(hparams)`.

Disclaimer: I'm part of the allegro.ai Trains team

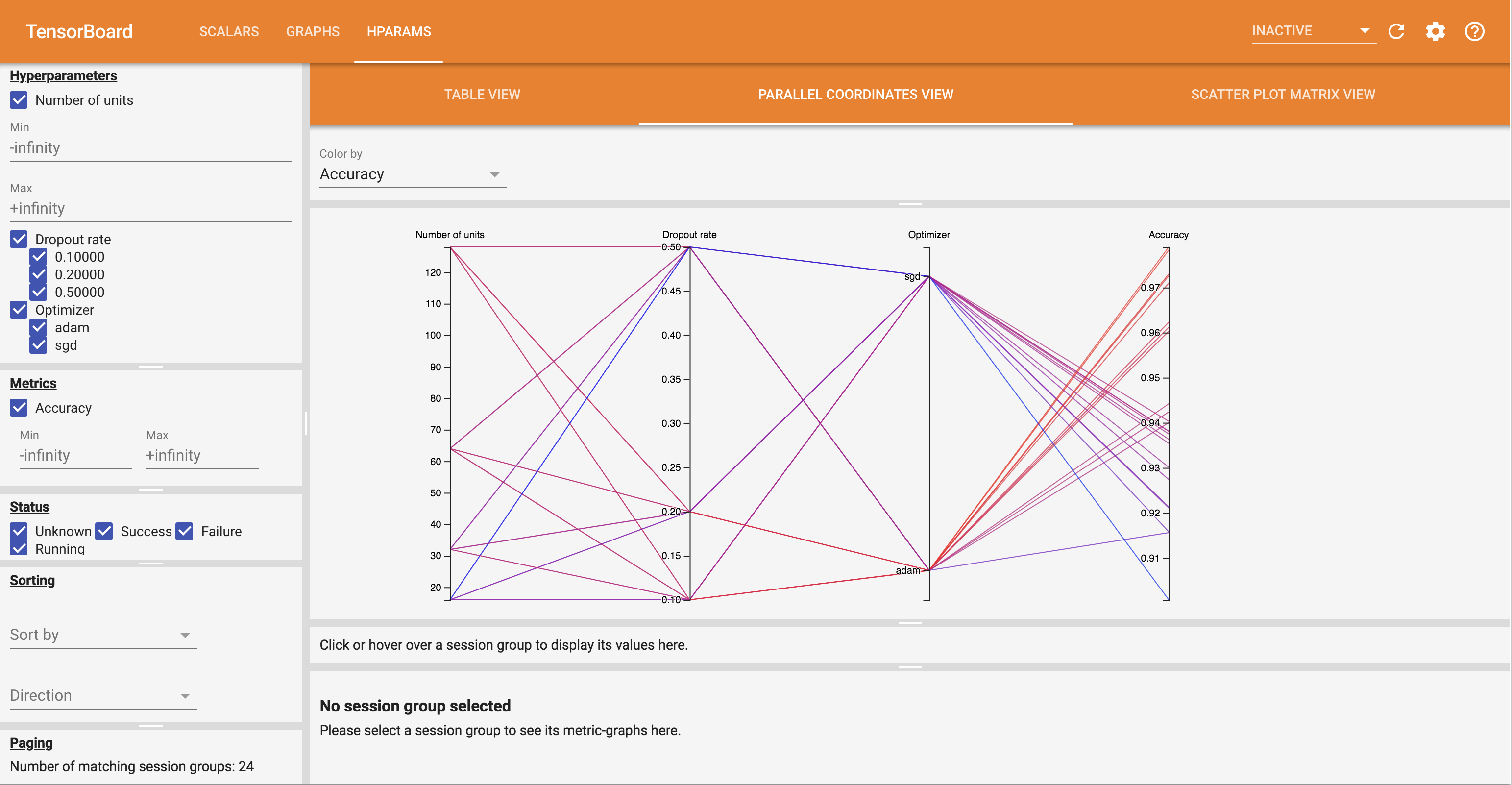

From the screen-grab, it looks like there are multiple runs with different hyper-parameters, and a parallel coordinates graph to display them. This is the equivalent of running the same base experiment multiple times with different hyper-parameters and comparing the results in the Trains web UI, so far so good :)

Based on the HParams interface, one would have to use TensorFlow to sample from HP, usually within the code. How would you extend this approach to multiple experiments? (It's not just about automagically logging the hparams; you need to create multiple experiments, one per parameter set.)
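To make the "one experiment per parameter set" point concrete, here is a minimal pure-Python sketch, assuming a discrete search space similar to the `hp.Discrete` domains in the HParams tutorial. The helper `expand_grid` and the example values are hypothetical; in practice each resulting dict would back its own Trains experiment (for example, connected to the task with `task.connect(params)`).

```python
from itertools import product
from typing import Any, Dict, List, Sequence


def expand_grid(space: Dict[str, Sequence[Any]]) -> List[Dict[str, Any]]:
    """Return one hyper-parameter dict per combination in the search space.

    Each returned dict corresponds to a single experiment run.
    """
    names = list(space)
    # product() yields every combination of the per-parameter value lists,
    # in the order the parameters were declared.
    return [dict(zip(names, values)) for values in product(*(space[n] for n in names))]


if __name__ == "__main__":
    # Hypothetical search space, mirroring the discrete domains of the HParams tutorial.
    search_space = {
        "num_units": [16, 32],
        "dropout": [0.1, 0.2],
        "optimizer": ["adam", "sgd"],
    }
    runs = expand_grid(search_space)
    print(len(runs))  # 8 combinations, i.e. 8 separate experiments
    for run_params in runs:
        print(run_params)
```

Even this small grid already needs eight runs, which is why logging alone is not enough: something has to create and track each of those experiments.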

Wouldn't it make more sense to use an external optimizer to do the optimization? This way you can scale to multiple machines and use more sophisticated optimization strategies (like Optuna). You can find a few examples in the Trains repository under examples/optimization.
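The key idea of an external optimizer is that it only proposes parameter sets and reads back a score, without living inside the training code. The sketch below illustrates that separation with plain random search; it is not the Trains optimizer or Optuna, and `random_search` plus the toy objective are hypothetical. In a real setup, `objective` would launch one experiment per call (possibly on another machine) and wait for its reported metric.

```python
import random
from typing import Any, Callable, Dict, Sequence, Tuple


def random_search(
    space: Dict[str, Sequence[Any]],
    objective: Callable[[Dict[str, Any]], float],
    n_trials: int,
    seed: int = 0,
) -> Tuple[Dict[str, Any], float]:
    """Sample n_trials parameter sets and return the best one (lowest score)."""
    if n_trials < 1:
        raise ValueError("n_trials must be at least 1")
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_params: Dict[str, Any] = {}
    best_score = float("inf")
    for _ in range(n_trials):
        # Pick one value per hyper-parameter, independently and uniformly.
        params = {name: rng.choice(list(values)) for name, values in space.items()}
        score = objective(params)  # stand-in for "run one experiment, read its metric"
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


if __name__ == "__main__":
    # Toy objective (hypothetical): prefers lr close to 0.01 and low dropout.
    def toy_objective(p: Dict[str, Any]) -> float:
        return (p["lr"] - 0.01) ** 2 + p["dropout"]

    space = {"lr": [0.1, 0.01, 0.001], "dropout": [0.0, 0.1, 0.2]}
    print(random_search(space, toy_objective, n_trials=20))
```

Because the optimizer only sees the `objective` callable, swapping random search for a smarter strategy, or dispatching each call to a remote worker, does not require touching the training script.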