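Hi, my scenario is this: I have a queue into which many sources put messages, and many consumers read those messages and perform some specific work.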

For this scenario, I created a topic in Kafka with this command:

```shell
bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test1
```

Then I wrote a Java class to consume it:

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

import kafka.consumer.ConsumerConfig;
import kafka.consumer.KafkaStream;
import kafka.javaapi.consumer.ConsumerConnector;

public class ConsumerGroupExample {
    private final ConsumerConnector consumer;
    private final String topic;
    private ExecutorService executor;

    public ConsumerGroupExample(String a_zookeeper, String a_groupId, String a_topic) {
        consumer = kafka.consumer.Consumer.createJavaConsumerConnector(
                createConsumerConfig(a_zookeeper, a_groupId));
        this.topic = a_topic;
    }

    public void shutdown() {
        if (consumer != null) consumer.shutdown();
        // Only wait on the executor if run() actually created it
        if (executor != null) {
            executor.shutdown();
            try {
                if (!executor.awaitTermination(5000, TimeUnit.MILLISECONDS)) {
                    System.out.println("Timed out waiting for consumer threads to shut down, exiting uncleanly");
                }
            } catch (InterruptedException e) {
                System.out.println("Interrupted during shutdown, exiting uncleanly");
            }
        }
    }

    public void run(int a_numThreads) {
        // Request a_numThreads streams for the topic
        Map<String, Integer> topicCountMap = new HashMap<String, Integer>();
        topicCountMap.put(topic, a_numThreads);
        Map<String, List<KafkaStream<byte[], byte[]>>> consumerMap = consumer.createMessageStreams(topicCountMap);
        List<KafkaStream<byte[], byte[]>> streams = consumerMap.get(topic);

        // now launch all the threads
        executor = Executors.newFixedThreadPool(a_numThreads);

        // now create an object to consume the messages
        // (ConsumerTest is a Runnable that reads one stream; it is not shown in this post)
        int threadNumber = 0;
        for (final KafkaStream<byte[], byte[]> stream : streams) {
            executor.submit(new ConsumerTest(stream, threadNumber));
            threadNumber++;
        }
    }

    private static ConsumerConfig createConsumerConfig(String a_zookeeper, String a_groupId) {
        Properties props = new Properties();
        props.put("zookeeper.connect", a_zookeeper);
        props.put("zookeeper.session.timeout.ms", "400");
        props.put("zookeeper.sync.time.ms", "200");
        props.put("group.id", a_groupId);
        props.put("num.consumer.fetchers", "2");
        props.put("partition.assignment.strategy", "roundrobin");
        props.put("auto.commit.interval.ms", "1000");
        return new ConsumerConfig(props);
    }

    public static void main(String[] args) throws InterruptedException {
        String zooKeeper = "tls.navaco.local:2181";
        String groupId = "group1";
        String topic = "test1";
        int threads = 4;

        ConsumerGroupExample example = new ConsumerGroupExample(zooKeeper, groupId, topic);
        example.run(threads);

        // Keep the main thread alive while the consumer threads run, without busy-spinning
        Thread.sleep(Long.MAX_VALUE);
    }
}
```

and another Java class to produce messages:

```java
import java.util.Properties;

import kafka.javaapi.producer.Producer;
import kafka.producer.KeyedMessage;
import kafka.producer.ProducerConfig;

public class TestProducer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("metadata.broker.list", "tls.navaco.local:9092");
        props.put("serializer.class", "kafka.serializer.StringEncoder");
        props.put("request.required.acks", "1");

        ProducerConfig config = new ProducerConfig(props);
        Producer<String, String> p = new Producer<String, String>(config);

        // sending...
        String topic = "test1";
        String message = "Hello Kafka";
        for (int i = 0; i < 1000; i++) {
            // No key is given, so the producer chooses the partition itself
            KeyedMessage<String, String> keyedMessage = new KeyedMessage<String, String>(topic, message + i);
            p.send(keyedMessage);
        }

        // Release the producer's network resources
        p.close();
    }
}
```

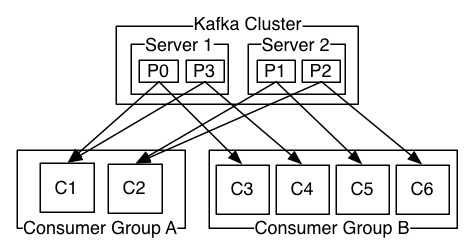

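As the Apache documentation says, if a topic is to act as a queue, the consumers should share the same group.id. I did that, but when I run 2, 3, or even more consumers, only one of them receives messages and the others do nothing.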

What I actually want is a queue. Ordering does not matter to me; what matters is that each message is consumed by exactly one consumer.
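For context on the behavior above: within one consumer group, each partition of a topic is consumed by exactly one consumer in the group, so a group cannot have more active consumers than the topic has partitions. The topic here was created with `--partitions 1`. The sketch below is a simplified model of that rule, assuming a plain round-robin spread of partitions over consumers; it is not Kafka's actual assignor code, and `PartitionAssignmentModel` and `assign` are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Simplified model (hypothetical, not Kafka's real assignor) of a consumer group:
 * every partition goes to exactly one consumer, so consumers beyond the
 * partition count are left with nothing to read.
 */
public class PartitionAssignmentModel {

    /** Spreads partitions round-robin over consumers; index i holds consumer i's partitions. */
    public static List<List<Integer>> assign(int numPartitions, int numConsumers) {
        List<List<Integer>> assignment = new ArrayList<>();
        for (int c = 0; c < numConsumers; c++) {
            assignment.add(new ArrayList<>());
        }
        for (int p = 0; p < numPartitions; p++) {
            assignment.get(p % numConsumers).add(p);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // One partition (as created above) shared by four consumer threads in the same group
        System.out.println(assign(1, 4)); // [[0], [], [], []]
        // Same group against a hypothetical four-partition topic
        System.out.println(assign(4, 4)); // [[0], [1], [2], [3]]
    }
}
```

In this model only consumer 0 ever receives data when there is a single partition, which matches what is observed with 2, 3, or more consumers.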

Is it possible to achieve this in Kafka, or should I use another product such as ActiveMQ, HornetQ, ...?