设想

我正在尝试对 Java GUI 应用程序中的数据集实施监督学习。用户将获得要检查的项目或“报告”列表,并将根据一组可用标签对其进行标记。一旦监督学习完成,标记的实例将被提供给学习算法。这将尝试根据用户想要查看它们的可能性对其余项目进行排序。

为了充分利用用户的时间,我想预先选择将提供有关整个报告集合的最多信息的报告,并让用户标记它们。据我了解,要计算这一点,有必要找到每个报告的所有互信息值的总和,并按该值对它们进行排序。然后,来自监督学习的标记报告将用于形成贝叶斯网络,以找到每个剩余报告的二进制值的概率。

例子

在这里,一个人为的例子可能有助于解释,并且当我无疑使用了错误的术语时可能会消除混乱:-) 考虑一个应用程序向用户显示新闻故事的例子。它根据显示的用户偏好选择首先显示哪些新闻报道。具有相关性的新闻故事的特征country of origin是category或date。因此,如果用户将来自苏格兰的单个新闻故事标记为有趣,它会告诉机器学习器,来自苏格兰的其他新闻故事对用户感兴趣的机会增加。类似于 Sport 等类别或 2004 年 12 月 12 日等日期。

可以通过为所有新闻故事选择任何顺序(例如,按类别、按日期)或随机排序它们,然后在用户进行时计算偏好来计算这种偏好。我想做的是让用户查看少量特定新闻故事并说出他们是否对它们感兴趣(监督学习部分),从而在该排序上获得一种“领先优势”。要选择向用户展示哪些故事,我必须考虑整个故事集合。这就是互信息的用武之地。对于每个故事,我想知道当它被用户分类时,它可以告诉我多少关于所有其他故事的信息。例如,如果有大量来自苏格兰的故事,我想让用户(至少)对其中一个进行分类。其他相关特征(例如类别或日期)也类似。目标是找到在分类时提供关于其他报告的最多信息的报告示例。

问题

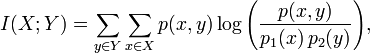

因为我的数学有点生疏,而且我是机器学习的新手,所以在将互信息的定义转换为 Java 实现时遇到了一些麻烦。维基百科将互信息的方程式描述为:

但是,我不确定这是否真的可以在没有分类的情况下使用,并且学习算法还没有计算任何东西。

在我的示例中,假设我有大量新的、未标记的此类实例:

public class NewsStory {

private String countryOfOrigin;

private String category;

private Date date;

// constructor, etc.

}

在我的具体场景中,字段/特征之间的相关性是基于精确匹配的,因此,例如,一天和 10 年的日期差异在它们的不等式上是等效的。

The factors for correlation (e.g. is date more correlating than category?) are not necessarily equal, but they can be predefined and constant. Does this mean that the result of the function p(x,y) is the predefined value, or am I mixing up terms?

The Question (finally)

How can I go about implementing the mutual information calculation given this (fake) example of news stories? Libraries, javadoc, code examples etc. are all welcome information. Also, if this approach is fundamentally flawed, explaining why that is the case would be just as valuable an answer.

PS. I am aware of libraries such as Weka and Apache Mahout, so just mentioning them is not really useful for me. I'm still searching through documentation and examples for both these libraries looking for stuff on Mutual Information specifically. What would really help me is pointing to resources (code examples, javadoc) where these libraries help with mutual information.